Latest Advancements in NLP on the Edge: A Guide for Tech VCs

Beyond the Cloud: NLP's New Frontier in Edge Computing

By Carl DeSalvo | Date Published: January 24, 2024 | 7 min read

We are undeniably in the midst of an AI revolution, with Natural Language Processing (NLP) solutions being deployed across every industry to drive efficiency, insights, and user experiences to new heights. However, much of the transformational potential of NLP remains untapped. The constraints of today's cloud-dependent systems hold back the pace of innovation and adoption. This is precisely why NLP on the Edge represents such a monumental opportunity for startups and investors alike.

With intelligent processing directly on edge devices, from sensors to smartphones to connected machines, NLP can be embedded seamlessly and at scale while overcoming cloud limitations like latency, connectivity, security, and operating costs. The resource efficiency of on-device inference also allows for innovative applications that were not previously possible.

As an early-stage tech VC, keeping your finger on the pulse of the latest developments in NLP on the edge is crucial to spot the next generation of high-growth startups. The edge AI sector is evolving extremely rapidly, with new technological breakthroughs constantly pushing the boundaries of what can be achieved. I will explore some of the most important advancements shaping the future of transformative NLP solutions.

Specialized Hardware Unlocks Cutting-Edge Capabilities

Advances in AI model architecture and neural networks grab most of the headlines. However, innovations in the underlying hardware that powers on-device NLP are just as vital to unlocking new capabilities. The tight integration of software and hardware is key to overcoming the intense computational demands of advanced NLP on resource-constrained edge devices.

As an investor, assessing startups' innovations in proprietary chipsets and system architectures specifically optimized for edge inference gives key insights into their ability to achieve speed, accuracy, and scale. The success stories of AI hardware leaders like Graphcore, SambaNova, and Cerebras demonstrate the immense value creation potential of purpose-built hardware. Note, however, that these companies focus on specialized hardware for servers and cloud-based solutions rather than for edge devices.

The market for specialized hardware deployed on edge devices has yet to be successfully addressed; today it is served largely by standard microprocessors. This is why companies like PIMIC Inc. are so important.

Solutions like PIMIC's are set to revolutionize the AI market by significantly reducing the cost of adoption for edge-based NLP products. These advancements improve power, performance, and latency as compared to traditional architectures. Additionally, a focus on ease of use and ease of adoption will play a pivotal role in accelerating market penetration, as these factors are crucial for the widespread acceptance and implementation of NLP technologies. This combination of affordability, performance enhancement, and user-friendly design positions PIMIC's solutions as a driving force in the expansion of NLP applications.

Specialized hardware for edge-based AI deployment is poised for rapid growth, and underestimating the importance of hardware tailored for edge NLP workloads is a common pitfall. In fact, the global market for AI semiconductors alone is projected to reach $91 billion by 2025. (1)

Secure On-Device Processing Drives Adoption

While performance metrics such as speed, capacity, and energy use are very important, data privacy and security are the biggest issues preventing the widespread use of NLP today. Because major security breaches have eroded trust among consumers and businesses, it is becoming increasingly essential to process data directly on devices rather than elsewhere.

This is why users of applications dealing with medical, financial, and social issues demand their data be processed locally and not in the cloud. Europe, for example, has a strong resistance to any voice data being processed in the cloud for all applications.

Evaluating how a startup keeps its operations entirely within the secure confines of the end nodes, rather than the cloud, provides insight into its readiness for deployment at scale.

Most efforts still struggle with tradeoffs between precision and privacy. Architectural innovations like processing-in-memory provide verifiable and confidential computing safeguards without negatively impacting utility, and they have the potential to accelerate NLP adoption exponentially.

Startups addressing edge-based NLP processing, focusing on accuracy, privacy, low cost, ease of use, and ease of integration, are certainly worth tracking as this sector of the AI semiconductor market has yet to be conquered and will represent a very large opportunity once it has.

Tight Integration Between Edge and Cloud

While edge intelligence eliminates many limitations of cloud dependency, it is not a wholesale replacement. The coordination and synergy between cloud and edge environments are critical for delivering positive outcomes at scale. Modern enterprises demand fluid orchestration where cloud resources amplify the capabilities of edge nodes.

When evaluating startups, analysis of their hybrid deployment architectures and fast data transfer frameworks provides perspective into the sophistication of their strategies. How seamlessly can their edge NLP integrate into existing on-premises devices and environments?

Today's solutions should have adaptable use models instead of just focusing on 100% decentralized applications. For example, using mixed algorithms that process sensitive parts on local systems and use cloud resources for suitable tasks can improve results. Examining how a startup combines and manages data across edge and cloud can show whether it is ready for complex business needs.
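To make the mixed approach concrete, here is a minimal sketch of how a request might be routed between an on-device model and a cloud service. Everything named here is a hypothetical placeholder for illustration: the `SENSITIVE_TERMS` keyword list, `run_on_device`, and `run_in_cloud` do not correspond to any vendor's API, and a real product would likely use a trained on-device classifier or policy engine rather than keywords.

```python
from dataclasses import dataclass

# Hypothetical markers of sensitive content (assumption for illustration only).
SENSITIVE_TERMS = {"diagnosis", "prescription", "account", "password", "ssn"}


@dataclass
class Result:
    text: str
    handled_by: str  # "edge" or "cloud"


def is_sensitive(utterance: str) -> bool:
    """Return True if any word in the utterance is a sensitive term."""
    words = {w.strip(".,!?").lower() for w in utterance.split()}
    return not words.isdisjoint(SENSITIVE_TERMS)


def run_on_device(utterance: str) -> str:
    # Placeholder for a small local model; the data never leaves the device.
    return f"[local] {utterance}"


def run_in_cloud(utterance: str) -> str:
    # Placeholder for a larger cloud-hosted model.
    return f"[cloud] {utterance}"


def route(utterance: str, cloud_available: bool = True) -> Result:
    """Keep sensitive requests local; use the cloud only when allowed and reachable."""
    if is_sensitive(utterance) or not cloud_available:
        return Result(run_on_device(utterance), "edge")
    return Result(run_in_cloud(utterance), "cloud")
```

In this sketch, `route("What is my account balance?")` stays on the device, while `route("Tell me a joke")` goes to the cloud and falls back to the device when `cloud_available` is `False`. When reviewing a startup's architecture, the interesting question is what plays the role of `is_sensitive` and who controls that policy.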

Data Augmentation Techniques Launch Viability

While advances across models, hardware, and architectures unlock new functionality, data remains the fuel that powers AI algorithms. Startups able to synthesize or amplify limited local data through various augmentation techniques possess an extreme competitive advantage.

In edge environments where labeled real-world data remains extremely scarce, innovations in simulation, normalization, and composability are crucial.

For example, Generative Adversarial Networks (GANs) are a type of machine learning framework used in the field of artificial intelligence. In a GAN, two neural networks are pitted against each other. One network, known as the "generator," is tasked with creating data that mimics real-world data, while the other network, known as the "discriminator," tries to differentiate between real and generated data. The goal of the generator is to produce data so convincing that the discriminator can't tell it apart from genuine data. This process leads to the generator improving its ability to create realistic data over time. GANs are particularly noted for their use in generating realistic images, but they're also applied in various other areas of research and development.
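For readers who want the formal version, the contest described above is usually written as the minimax objective from the original GAN formulation. Here G is the generator, D is the discriminator, x is a real data sample, and z is the random noise fed to the generator; D outputs the probability that its input is real.

```latex
\min_{G} \max_{D} \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)} \bigl[ \log D(x) \bigr]
  + \mathbb{E}_{z \sim p_{z}(z)} \bigl[ \log \bigl( 1 - D(G(z)) \bigr) \bigr]
```

The discriminator tries to make this value large by scoring real samples near 1 and generated samples near 0, while the generator tries to make it small by producing samples that the discriminator scores as real.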

Generative networks that effectively mimic target data allow effective on-device learning without massive datasets. Similarly, virtual sensors and digital twin technology show immense promise to overcome data bottlenecks.

Look at startups' unique methods for improving, creating, and expanding data, not just the basic first steps.

Few-Shot Learning - a method in which a model is trained to recognize patterns or make predictions from a very small amount of data, as opposed to traditional methods that require large datasets.
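As a rough illustration of the idea, the sketch below classifies voice-command text from only three examples per intent by comparing a query against a per-class "prototype". This nearest-prototype approach is one simple few-shot technique chosen for illustration, not a method this article attributes to any company. The `embed` function is a toy bag-of-words stand-in (an assumption); a real system would use a learned encoder.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (stand-in for a learned encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prototypes(support: dict) -> dict:
    """Sum the embeddings of the few labeled examples per class into one prototype."""
    prototypes = {}
    for label, examples in support.items():
        proto = Counter()
        for example in examples:
            proto.update(embed(example))
        prototypes[label] = proto
    return prototypes


def classify(text: str, prototypes: dict) -> str:
    """Assign the label whose prototype is most similar to the query."""
    query = embed(text)
    return max(prototypes, key=lambda label: cosine(query, prototypes[label]))


# Three examples per intent make up the entire "training set" (hypothetical data).
support = {
    "lights": ["turn on the lights", "switch off the lamp", "dim the lights"],
    "music": ["play some music", "skip this song", "pause the music"],
}
prototypes = build_prototypes(support)
```

With these prototypes, `classify("please turn the lights off", prototypes)` selects the `lights` intent and `classify("play another song", prototypes)` selects `music`, even though neither sentence appears in the support set.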

Clever Sampling - the intelligent selection of a small subset of data that is still representative of the whole, allowing the model to learn effectively from limited information. It can be combined with adversarial enhancement (described above).
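One way to pick a small but representative subset is greedy farthest-point selection, sketched below. This is a swapped-in technique for illustration; the article does not prescribe a particular sampling method. The function name `farthest_point_sample` and the 2-D points are hypothetical, standing in for feature vectors extracted from real recordings or text.

```python
import math


def farthest_point_sample(points, k):
    """Greedily select k indices so the chosen points spread across the data."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    chosen = [0]  # arbitrary starting point
    # Distance from every point to its nearest chosen point so far.
    nearest = [math.dist(p, points[0]) for p in points]
    while len(chosen) < k:
        # Pick the point that is farthest from everything chosen so far.
        nxt = max(range(len(points)), key=nearest.__getitem__)
        chosen.append(nxt)
        nearest = [min(d, math.dist(p, points[nxt])) for d, p in zip(nearest, points)]
    return chosen


# Hypothetical 2-D feature vectors forming three tight clusters.
points = [(0, 0), (0.1, 0), (0, 0.1), (5, 5), (5.1, 5), (10, 0), (10, 0.2)]
subset = farthest_point_sample(points, 3)
```

On this toy data the three selected indices fall in three different clusters, so labeling just those three samples covers every region of the data instead of oversampling the largest cluster.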

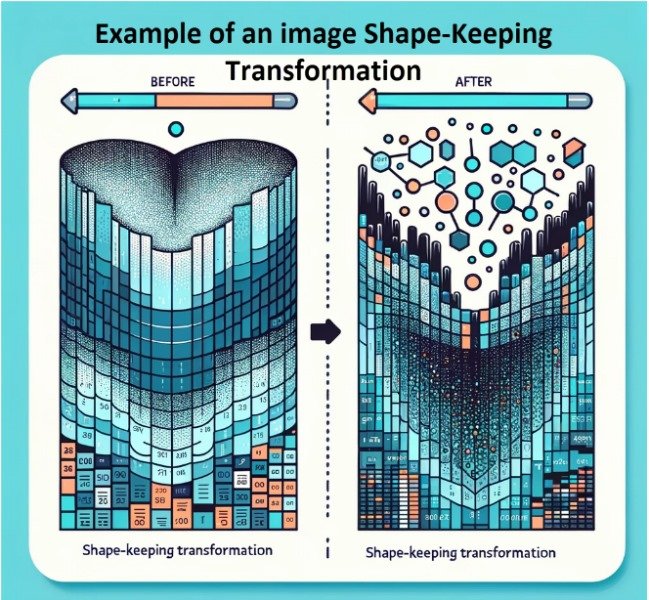

Shape-Keeping Transformations - data transformations that maintain the essential characteristics or patterns of the data, even as it's being altered for training purposes.

Example of Shape-Keeping for Images
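A minimal sketch of shape-keeping transformations on image data follows. It turns one labeled sample into three by mirroring and shifting it, with the label carried over unchanged. The tiny 4x4 image, the `corner` label, and the function names are hypothetical; production pipelines typically use an image or audio library rather than nested lists.

```python
def flip_horizontal(image):
    """Mirror each row; the object's shape is preserved, only its orientation changes."""
    return [row[::-1] for row in image]


def shift_right(image, pixels=1, fill=0):
    """Translate the image to the right, padding the left edge with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in image]


def augment(image, label):
    """Return the original plus transformed copies, all sharing the same label."""
    return [
        (image, label),
        (flip_horizontal(image), label),
        (shift_right(image), label),
    ]


# A tiny 4x4 binary "image" of a corner-shaped stroke (hypothetical data).
image = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
samples = augment(image, "corner")
```

The choice of transformation matters: it must not change what the sample means. Mirroring is safe for a generic shape but would not be for handwritten letters, where a flipped character can become a different one.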

All of these offer ways to achieve top performance without large datasets, which may be unavailable and are likely to be very expensive. These methods significantly affect the accuracy of advanced NLP solutions and how they are created and used.

Remember, hardware architecture is the key to unlocking advanced system parameters and performance, and data is the key to developing the best model for a given application. Both are needed to create a winning AI solution that will dominate any given market.

The Next Wave of Innovation Awaits

Edge computing, 5G networks, augmented reality, blockchain, robotics, and quantum technologies are all primed to intersect over the coming decade, culminating in paradigm shifts we cannot yet fully anticipate. However, one certainty among all this disruption is the ubiquity of natural language as the definitive interface through which humans will interact with the machines powering the future.

NLP on the edge sits right at ground zero of this transformation, unlocking capabilities at scale while preserving trust. I hope this guide has shed light on some of the core areas powering the latest advancements today. By deeply educating yourself on these emerging trends and identifying the startups pushing the envelope early, you position yourself to fund the changemakers of tomorrow. The next wave of innovation awaits – are you ready to ride it?

Footnotes

Author’s Bio

Carl DeSalvo, an alumnus of the University of Illinois with a Bachelor of Science in Electrical Engineering (BSEE), has established a distinguished career in the Electronic Design Automation (EDA) industry. His professional journey includes significant roles at renowned firms such as Cadence Design Systems, Synopsys Inc., and Mentor Graphics, as well as pivotal contributions to various emerging startups. Carl's expertise expanded globally during a three-year tenure in Europe, where he played a crucial role at Cadence Design Systems, supporting backend ASIC design for some of the region's largest electronics corporations.

On returning to the United States, Carl seamlessly transitioned into Sales and Marketing, demonstrating his versatility and business acumen. This phase of his career culminated in the founding of EDATechForce, LLC, a sales representative firm that, over a decade, partnered with more than 20 companies, benefiting from Carl's leadership and sales strategies.

Currently, Carl is the Vice President of Business Development and Sales at PIMIC, Inc., an AI startup at the forefront of designing innovative silicon solutions for efficient neural network architectures. His extensive experience and dynamic approach continue to drive growth and technological advancements in the AI industry.